The digital landscape is in constant flux, with artificial intelligence (AI) woven into the fabric of our daily textual interactions. As language models such as GPT-2 grow more sophisticated, the line between human- and machine-generated content blurs, making it harder to pick out authentic voices amid the flood of AI-created text. In response, a new class of AI content detectors has emerged, offering tools for those who value the integrity of human authorship. This overview of current AI content detection technologies examines what these tools can and cannot do, and offers the general public a guide to navigating this new terrain with confidence.

GPT-2 Output Detector Demo by OpenAI

Harnessing the Power of AI to Detect GPT-2 Generated Text

In the ever-evolving landscape of artificial intelligence, distinguishing human from AI-generated content has become a compelling challenge. Generative Pre-trained Transformer 2 (GPT-2), developed by OpenAI, was a game-changer in generating human-like text, prompting tech aficionados to seek innovative ways to detect it. OpenAI itself released the GPT-2 Output Detector, a classifier fine-tuned from the RoBERTa model, along with a public demo. Here's a look at state-of-the-art tools and techniques for identifying GPT-2-generated content.

Detecting GPT-2 with AI: Generative AI has sparked an arms race in which every new generative model invites a detection model. AI detectors are trained specifically to distinguish text written by humans from text produced by GPT-2. Building such a detector means training it on large amounts of both AI-generated and human-written text, so that it learns the subtle differences and can flag suspect content accurately.

Linguistic Quirks: GPT-2 content is eerily good, but even the smartest AI has telltale linguistic quirks. Watch for overly verbose sentences, a lack of nuanced human insight, or repetitive word choice. AI-generated text often struggles with context and can miss the mark on cultural references or current events that a human writer would naturally weave into their prose, not least because a model knows nothing about events after its training data was collected.
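Repetitiveness is one of the few quirks that can be roughly quantified. The Python sketch below computes a distinct-n ratio, which is the share of word n-grams in a passage that are unique. Lower values mean more repetition. The function names, the whitespace tokenization, and the idea of reading a low score as a warning sign are illustrative assumptions. No single metric like this reliably separates human text from GPT-2 text.

```python
from collections import Counter

def ngrams(text: str, n: int) -> list:
    """Lowercased, whitespace-tokenized word n-grams."""
    tokens = text.lower().split()
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def distinct_n(text: str, n: int = 2) -> float:
    """Share of n-grams that are unique; lower values mean more repetition."""
    grams = ngrams(text, n)
    return len(set(grams)) / len(grams) if grams else 1.0

def most_repeated(text: str, n: int = 2, top: int = 3) -> list:
    """The n-grams that occur most often, with their counts."""
    return Counter(ngrams(text, n)).most_common(top)
```

A passage that loops back on the same phrases scores well below 1.0. `most_repeated` then shows which phrases are doing the looping.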

Statistical Analysis: Beyond the trained eye, a mathematical approach can reveal the AI's handiwork. Statistical analysis measures how predictable each word is to a language model. Generated text tends to favor high-probability words and phrases, while human writing contains more surprising choices. This data-driven approach adds an empirical edge to the detection toolkit, sifting through text at a scale and with a consistency no person could match by hand.
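Here is a minimal sketch of the rank-based idea behind tools such as GLTR: for each word, check whether it was among the words a language model considered most likely at that position. In this sketch, a toy bigram counter built from a small reference corpus stands in for GPT-2. `train_bigrams` and `top_k_fraction` are hypothetical helper names. A higher top-k fraction means the text sticks closely to the model's expectations, which is the pattern associated with machine generation.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus: str) -> dict:
    """Count which word follows which in a reference corpus (a toy stand-in for GPT-2)."""
    tokens = corpus.lower().split()
    model = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        model[prev][nxt] += 1
    return model

def top_k_fraction(text: str, model: dict, k: int = 1) -> float:
    """Share of words that appear among the model's k most likely next words."""
    tokens = text.lower().split()
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return 0.0
    hits = 0
    for prev, nxt in pairs:
        # .get avoids inserting unseen words into the defaultdict
        likely = [word for word, _ in model.get(prev, Counter()).most_common(k)]
        hits += nxt in likely
    return hits / len(pairs)
```

With a real language model, the same loop would rank the model's full next-token distribution instead of simple bigram counts.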

Cross-referencing Facts: GPT-2 may be creative, but it doesn’t always get its facts straight. Cross-referencing the information in a suspect piece with trusted sources can expose AI-generated falsehoods or inaccuracies that a human expert would avoid.

Interactive Methods: Engaging suspected AI in conversation is an experimental yet practical method to unmask the machine. Posing context-sensitive questions or seeking clarifications can trip up AI, as the limitations in understanding and responding to dynamic inquiries become apparent.

Browser Extensions: For those who want a simple, user-friendly solution, browser extensions designed to flag AI-written text are emerging. These plug-ins analyze content in real time as you browse, offering a quick second opinion with minimal effort, though their verdicts are probabilistic and can be wrong. They suit readers who regularly encounter a mix of human and AI-generated text in their daily digital diet.

Machine Learning Models Trained on GPT-2 Output: When machine learning models are fed large amounts of GPT-2 output alongside human-written content, they become adept at spotting the distinct patterns of AI-generated text. They learn idiosyncrasies characteristic of GPT-2's style and can therefore flag content that bears its digital fingerprints. However, a detector tuned to one generator may perform worse on text from other models.
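To illustrate the training idea, here is a small multinomial Naive Bayes classifier over word counts. Real GPT-2 detectors, such as OpenAI's RoBERTa-based one, are fine-tuned neural networks trained on far more data. This class (`NaiveBayesDetector`, a hypothetical name) is only a stand-in that shows how labeled human and AI examples turn into a decision. It assumes both labels appear in the training data.

```python
import math
from collections import Counter

class NaiveBayesDetector:
    """Multinomial Naive Bayes over lowercase word counts (labels: 'human', 'ai')."""

    def __init__(self):
        self.word_counts = {"human": Counter(), "ai": Counter()}
        self.doc_counts = Counter()

    def fit(self, texts, labels):
        for text, label in zip(texts, labels):
            self.doc_counts[label] += 1
            self.word_counts[label].update(text.lower().split())
        return self

    def predict(self, text: str) -> str:
        vocab = set(self.word_counts["human"]) | set(self.word_counts["ai"])
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            score = math.log(self.doc_counts[label] / total_docs)  # class prior
            for word in text.lower().split():
                # Add-one smoothing keeps unseen words from zeroing the probability
                score += math.log((counts[word] + 1) / (total_words + len(vocab)))
            scores[label] = score
        return max(scores, key=scores.get)
```

With a realistic dataset, you would also hold out test examples to measure false positives. Wrongly flagging human writing is the costliest error a detector can make.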

Blockchain Verification: Blockchain technology offers one route to accountability. Imagine a future where each piece of content carries a verifiable history, with a digital signature that validates its human author. The concept is still in its early stages, but a ledger of authenticity for digital content could make it much harder for AI imposters to pass off their prose as human-crafted.
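The ledger idea can be sketched as a hash chain, the core data structure behind blockchains. Each entry stores a SHA-256 fingerprint of the content plus the hash of the previous entry, so altering any past record breaks every link after it. This is a simplified, single-machine illustration with hypothetical function names. It omits the digital signatures, consensus, and distribution a real blockchain would need. Also, a tamper-evident record only shows when something was registered, not that a human wrote it.

```python
import hashlib
import json

def _digest(record: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode("utf-8")).hexdigest()

def make_entry(content: str, author: str, prev_hash: str, timestamp: float) -> dict:
    """Ledger entry linking a content fingerprint to the previous entry."""
    entry = {
        "author": author,
        "content_hash": hashlib.sha256(content.encode("utf-8")).hexdigest(),
        "prev_hash": prev_hash,
        "timestamp": timestamp,
    }
    entry["hash"] = _digest(entry)  # computed before the "hash" key exists
    return entry

def verify_chain(chain: list) -> bool:
    """Recompute every hash and check that each entry points at its predecessor."""
    for i, entry in enumerate(chain):
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["hash"] != _digest(body):
            return False
        if i > 0 and entry["prev_hash"] != chain[i - 1]["hash"]:
            return False
    return True
```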

Embracing the Intersection of Creation and Detection: Tech enthusiasts understand the allure of new AI capabilities but also recognize the importance of staying ahead of the curve in detection. In the race between AI content generators and detectors, staying informed about cutting-edge technology is crucial. As GPT-2 and similar models evolve, so too must the tools designed to distinguish machine-authored text from the human intellect’s creation.

Hugging Face’s Transformer Neural Network Model

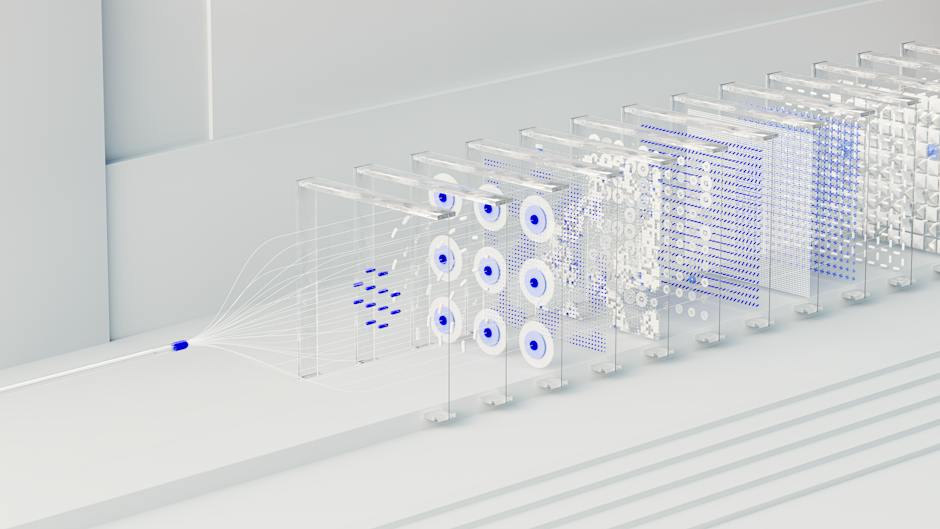

Harnessing Deep Learning Model Architecture for Enhanced Detection Capabilities

The advancement of AI technology has brought the prowess of deep learning models to the forefront of text verification processes. These sophisticated models, constructed on neural network architectures, are escalating the arms race against AI-generated content. Consider a deep learning model that builds upon the transformer architecture, surpassing earlier generations in its recognition capabilities. It scrutinizes linguistic subtleties and coherency levels within the content, something mere statistical methods may miss.

Leveraging Model Uncertainty for Diagnostics

One cutting-edge strategy exploits a language model's own uncertainty. By measuring how confidently a model predicts each successive word of a passage, researchers and tech enthusiasts are developing methods to flag potentially AI-written sections. Text generators tend to pick high-probability words, so machine-written passages often show unusually low uncertainty (low perplexity) when scored by a similar model. This can serve as a useful signal of non-human authorship, though it is not proof on its own.
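Uncertainty is usually measured as perplexity: the exponential of the average negative log-probability a language model assigns to each token. In practice, detectors built on this idea tend to look for text the scoring model finds unusually predictable, that is, text with low perplexity. The sketch assumes per-token probabilities have already been obtained from some language model, for example by scoring the text with GPT-2. The 10.0 threshold is an arbitrary placeholder that would need calibrating on real data.

```python
import math

def perplexity(token_probs: list) -> float:
    """exp of the mean negative log-probability the scoring model gave each token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)

def flag_low_perplexity(token_probs: list, threshold: float = 10.0) -> bool:
    """Flag text the model found unusually predictable (placeholder threshold)."""
    return perplexity(token_probs) < threshold
```

For intuition, a model that gives every token probability 0.5 has a perplexity of 2. It is "choosing" between two equally likely options at each step.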

Incorporating Semantic Analysis Tools

A newer angle brings semantic analysis into the equation. Beyond surface-level quirks or statistical patterns, semantic analysis tools examine the actual meaning carried by the text. These tools, increasingly integrated into deep learning architectures, assess contextual relevance and inferential logic. In doing so, the models can flag text that seems plausible but deviates in subtle semantic ways from what a human would be likely to produce.
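Production semantic tools rely on learned sentence embeddings. The underlying question, how well each sentence connects to the next, can still be sketched with plain bag-of-words vectors and cosine similarity. The helper names and the regex-based sentence splitting are assumptions for illustration. A sharp drop in similarity between neighbouring sentences is only a hint of a coherence break, not evidence of AI authorship.

```python
import math
import re
from collections import Counter

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def adjacent_coherence(text: str) -> list:
    """Similarity of each sentence to the next one (bag-of-words stand-in for embeddings)."""
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    vectors = [Counter(re.findall(r"[a-z']+", s.lower())) for s in sentences]
    return [cosine(a, b) for a, b in zip(vectors, vectors[1:])]
```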

Adapting to Evolving Language Models

As language models continue to evolve, so do the deep learning models designed to detect them. Adaptability is key. Machine learning enthusiasts are pushing for systems that self-improve by constantly consuming new AI-generated text as part of their training data. The result? A detection system that stays as dynamic and ever-adaptive as the AI writers it’s designed to catch.

Collaborative Human-AI Efforts

The synergy between human insight and AI’s brute-force analysis is a force to be reckoned with. Crowdsourced annotations combined with deep learning model outputs create a hybrid detection force. In this collaboration, the contextual and cultural knowledge of humans bridges the gaps that AI might still overlook, resulting in a nuanced, robust detection mechanism.
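One simple way to merge the two signals is a weighted blend. It combines a model's estimated probability that a text is AI-written with the share of human annotators who voted "ai". The `combined_score` function and its default 50/50 weighting are hypothetical. Real systems might instead weight annotators by their track record or escalate disagreements to an expert.

```python
def combined_score(model_prob_ai: float, crowd_votes: list, model_weight: float = 0.5) -> float:
    """Weighted blend of a model's AI-probability and the share of annotators voting "ai"."""
    if not crowd_votes:
        return model_prob_ai  # no human input yet: fall back to the model alone
    crowd_share = sum(1 for vote in crowd_votes if vote == "ai") / len(crowd_votes)
    return model_weight * model_prob_ai + (1 - model_weight) * crowd_share
```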

Deep learning models are not just passive filters but active participants in the realm of content authenticity. Their continuous development reflects the tech community’s commitment to maintaining a landscape where human creativity and machine efficiency coexist and are clearly distinguishable. With such technology in the works, the question isn’t whether AI-written text will be detectable, but how swiftly and seamlessly these systems will integrate into the daily flow of information consumption.

Botometer by OSoMe

Leveraging the Social Graph for Bot Detection

Turn attention to the interconnectivity of social media profiles. Real users often have a rich web of connections reflecting years of interactions, whereas bots may have artificially generated or shallow networks. Advanced algorithms can now analyze these social graphs, looking for patterns that betray a non-human hand in account creation and maintenance.
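One classic social-graph feature is the local clustering coefficient: the fraction of an account's connections that are also connected to each other. Organic friend circles tend to overlap, while purchased or fabricated followers often do not. The sketch below computes this coefficient for an undirected graph stored as a dictionary of neighbour sets. It is an illustrative feature, not Botometer's actual model, which combines many account features.

```python
from itertools import combinations

def clustering_coefficient(graph: dict, node: str) -> float:
    """Share of a node's neighbour pairs that are also linked (undirected graph of neighbour sets)."""
    neighbours = graph.get(node, set())
    k = len(neighbours)
    if k < 2:
        return 0.0
    linked = sum(1 for a, b in combinations(neighbours, 2) if b in graph.get(a, set()))
    return linked / (k * (k - 1) / 2)
```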

Sentiment Consistency and Behavioral Patterns

Dive into the subtleties of sentiment and behavior. Bots programmed to sway public opinion on hot topics might display unnatural consistency in sentiment or deviate from typical human emotional fluctuations. Profile activity monitoring tools parse through posts and comments, searching for these anomalous patterns to flag potential bots.
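A minimal version of this check scores the sentiment of each post and flags accounts whose scores barely vary. The tiny word lists below are a hypothetical stand-in for a proper sentiment model. The 0.1 standard-deviation cutoff is an assumed value that would need tuning.

```python
import statistics

# Hypothetical mini-lexicons standing in for a real sentiment model
POSITIVE = {"great", "love", "win", "best"}
NEGATIVE = {"bad", "hate", "lose", "worst"}

def lexicon_score(post: str) -> float:
    """Crude sentiment in [-1, 1]: (positive words - negative words) / word count."""
    words = post.lower().split()
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    return (pos - neg) / max(len(words), 1)

def sentiment_flag(scores: list, min_stdev: float = 0.1) -> bool:
    """Flag an account whose per-post sentiment barely varies."""
    if len(scores) < 2:
        return False
    return statistics.pstdev(scores) < min_stdev
```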

Profile Image and Metadata Analysis

Place focus on the visuals. AI-driven reverse image searches combined with metadata scrutiny can unearth reused or stock images commonly employed by bot creators. Some forensic tools even detect slight digital manipulations, often missed by the human eye, that point to synthetic origins.
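Reused profile pictures are often caught with perceptual hashing. The average-hash variant sketched here starts from an image already shrunk to a small grayscale grid. It reduces the grid to one bit per pixel: 1 if the pixel is brighter than the mean, 0 if darker. Near-duplicate images then end up a small Hamming distance apart, even after resizing or brightness changes. The sketch assumes the pixel grids are prepared beforehand, for example with an imaging library. The function names are illustrative.

```python
def average_hash(pixels: list) -> list:
    """One bit per pixel: 1 if brighter than the image's mean, else 0."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]

def hamming(a: list, b: list) -> int:
    """Number of positions where two equal-length hashes differ."""
    return sum(x != y for x, y in zip(a, b))
```

Common implementations shrink images to 8x8 pixels, giving a 64-bit hash. A distance of only a few bits then suggests the same underlying photo.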

Timing and Geolocation Discrepancies

Time is of the essence. Authentic users tend to be active at hours consistent with their time zone and daily routine, including breaks for sleep, and bots often ignore these constraints. By examining account activity timestamps and geolocation tags, detection systems can identify inconsistencies hinting at automated operation.
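Timing regularity can be summarized as the Shannon entropy of an account's posting hours. An account that posts evenly around the clock approaches the maximum of log2(24), about 4.58 bits. A person who sleeps and works concentrates activity in fewer hours. This sketch uses UTC hours from Unix timestamps. A fuller check would convert times to the account's claimed time zone and compare them against geolocation data. The function name is hypothetical.

```python
import math
from collections import Counter
from datetime import datetime, timezone

def hourly_entropy(timestamps: list) -> float:
    """Shannon entropy, in bits, of the UTC hours at which an account posted."""
    if not timestamps:
        return 0.0
    hours = Counter(datetime.fromtimestamp(ts, tz=timezone.utc).hour for ts in timestamps)
    total = len(timestamps)
    return -sum((c / total) * math.log2(c / total) for c in hours.values())
```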

Engagement and Response Rate Analysis

Evaluate engagement levels. Bots may either be overly active, responding to stimuli at superhuman speed, or suspiciously dormant until triggered for a specific campaign. Analyzing the frequency and timing of interactions relative to real-world events can identify aberrations indicative of non-human actors.
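Reply latency is one concrete engagement signal. The sketch below pairs each triggering event with the account's reply and reports the median delay. It also counts replies that arrive faster than a plausible human reaction. The two-second floor and the function name are assumptions for illustration.

```python
import statistics

def response_stats(event_times: list, reply_times: list, min_human_latency: float = 2.0) -> dict:
    """Median reply delay (seconds) and the share of replies faster than min_human_latency."""
    latencies = [reply - event for event, reply in zip(event_times, reply_times)]
    if not latencies:
        return {"median_latency": None, "superhuman_share": 0.0}
    fast = sum(1 for d in latencies if d < min_human_latency)
    return {"median_latency": statistics.median(latencies), "superhuman_share": fast / len(latencies)}
```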

Use of Honeytokens and Decoys

Set digital traps known as honeytokens, such as fake accounts or links seeded across social media. They act as bait to lure bots, which, unlike discerning humans, may interact with these decoys without skepticism. Such engagements are monitored and traced back to the source for confirmation of automated activity.

Implementing CAPTCHAs and Challenge-Response Tests

Challenge potential bots with CAPTCHAs and puzzles designed to be easy for people but hard for automated scripts, although modern AI can now solve many traditional CAPTCHAs. Integrated seamlessly into social platforms, these tests act as gatekeepers, helping to separate human users from bots attempting to infiltrate or engage with content on the network.
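The challenge-response plumbing behind such tests can be sketched with the Python standard library. The server issues a question along with an HMAC of the correct answer. It then verifies the submitted answer against that token without storing per-user state. The arithmetic question is deliberately trivial and would not stop a bot; it only stands in for a harder puzzle. The function names are hypothetical. A real deployment would also add an expiry and single-use tracking so tokens cannot be replayed.

```python
import hashlib
import hmac
import secrets

SERVER_KEY = secrets.token_bytes(32)  # stays on the server; never sent to the client

def _sign(answer: str) -> str:
    return hmac.new(SERVER_KEY, answer.encode("utf-8"), hashlib.sha256).hexdigest()

def issue_challenge() -> tuple:
    """Return a question for the user and an opaque token for the server to check later."""
    a, b = secrets.randbelow(10) + 1, secrets.randbelow(10) + 1
    return f"What is {a} + {b}?", _sign(str(a + b))

def check_answer(answer: str, token: str) -> bool:
    """Constant-time comparison of the signed submitted answer with the issued token."""
    return hmac.compare_digest(_sign(answer.strip()), token)
```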

Network Similarity Analysis

Compare accounts against confirmed bot profiles. This involves a detailed analysis of attributes such as posting frequency, content similarity, and network behavior to identify clusters of bot-operated accounts functioning under similar parameters, revealing concerted influence campaigns.
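Content similarity between accounts is often measured with Jaccard similarity over word shingles, which are overlapping runs of n words. The sketch compares every account with a confirmed bot and returns those above a threshold. The 0.5 cutoff, the 3-word shingles, and the function names are illustrative assumptions. Real systems would combine this measure with posting-frequency and network features.

```python
def shingles(text: str, n: int = 3) -> set:
    """Overlapping runs of n lowercased words."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by the size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def similar_accounts(posts_by_account: dict, known_bot: str, threshold: float = 0.5) -> list:
    """Accounts whose combined post shingles overlap heavily with a confirmed bot's."""
    def account_shingles(posts):
        return set().union(*(shingles(p) for p in posts))

    bot = account_shingles(posts_by_account[known_bot])
    return sorted(
        account for account, posts in posts_by_account.items()
        if account != known_bot and jaccard(bot, account_shingles(posts)) >= threshold
    )
```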

Harnessing Behavioral Biometrics

Turn to behavioral biometrics for a layer of depth. By analyzing keystroke dynamics, mouse movements, and other interaction patterns, platforms can differentiate between the organic activities of a human and the scripted responses of bots, which lack the nuances of human behavior.
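Keystroke dynamics can be reduced to the timing between key presses. Scripted input tends to be unnaturally regular, so a low coefficient of variation (standard deviation divided by mean) of the inter-key intervals is a warning sign. The function names and the 0.15 threshold are hypothetical. Real behavioral-biometric systems model many more signals.

```python
import statistics

def keystroke_features(key_times: list) -> tuple:
    """Mean inter-key interval (seconds) and its coefficient of variation."""
    intervals = [b - a for a, b in zip(key_times, key_times[1:])]
    if len(intervals) < 2:
        raise ValueError("need at least three key presses")
    mean = statistics.mean(intervals)
    cv = statistics.pstdev(intervals) / mean if mean else 0.0
    return mean, cv

def looks_scripted(key_times: list, min_cv: float = 0.15) -> bool:
    """Very regular typing rhythm (low variation) is treated as suspicious."""
    _, cv = keystroke_features(key_times)
    return cv < min_cv
```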

Collaborating with Human Analysts

No system is complete without the irreplaceable insight of human intuition. Combine the ingenuity of tech-savvy operatives with sophisticated tools to verify and confirm suspicions raised by automated processes. Drawing on diverse experience, people can spot and understand the complex subtleties of bot behaviors in ways purely algorithmic approaches may miss.

In conclusion, with technological advancement roaring at full throttle, the race to unmask social media bots is on. By combining leading-edge techniques rooted in deep learning, human-AI collaboration, and behavioral analysis, detection systems are making the line between genuine and artificial accounts clearer. Whether through the examination of social patterns or the deployment of digital decoys, it is now more feasible than ever to protect the integrity of social media discourse. This is the new frontier, and staying a step ahead of an ever-evolving landscape is not just a challenge but a necessity for the technology enthusiast.


The seamless integration of AI into content creation summons a pressing need for vigilant discernment in the consumption of information. By embracing the power of cutting-edge detection technologies, we arm ourselves with the knowledge to uphold the authenticity of our collective discourse. Continuing to track the developments of these AI detectors not only enriches our understanding but also fosters a more transparent and trustworthy textual ecosystem. As we engage with an array of content daily, let us be steadfast in our commitment to value and preserve the human element that underpins the unique tapestry of our shared narratives.

This article was written by Writio.